In my previous post, I demonstrated how one can get a Dataproc Serverless pipeline up and running from the CLI. In this post, we'll look at how Dataproc Serverless integrates.

First, our "router" DAG is not idempotent: the input always changes because of the non-deterministic character of a RabbitMQ queue. So, if you have a problem in your logic and restart the pipeline, you won't see the already processed messages again, unless you never retry the router tasks and only reprocess the triggered DAGs, which in this context could be an acceptable trade-off.

Another point to analyze, related to replayability, concerns externally triggered DAGs.
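The idempotency problem of the router DAG can be illustrated with a minimal sketch. It assumes an in-memory `queue.Queue` as a stand-in for RabbitMQ, and the message shape (`dag_id`) and the function name `route_pending_messages` are hypothetical, chosen only for illustration. The point is that consuming a message removes it, so a retry of the same task sees different input.

```python
import queue


def route_pending_messages(broker):
    """Drain the queue and return the DAG ids that would be triggered."""
    triggered = []
    while True:
        try:
            # Consuming removes the message from the queue for good.
            message = broker.get_nowait()
        except queue.Empty:
            return triggered
        triggered.append(message["dag_id"])


# Stand-in broker with two hypothetical messages.
broker = queue.Queue()
for dag_id in ("hello_world_a", "hello_world_b"):
    broker.put({"dag_id": dag_id})

first_run = route_pending_messages(broker)
retry = route_pending_messages(broker)  # same task, retried later
print(first_run, retry)
```

The retry returns nothing because the first run already consumed both messages. This is why reprocessing only the triggered DAGs, and never the router, can be the safer option.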

A DAG does not have to run on a schedule; it can also be executed only on demand. To enable this, set the `schedule_interval` property of your DAG (`schedule` in recent Airflow versions) to None. I will use this setting later in the demo code.

In the first DAG, insert the call to the next one as a `TriggerDagRunOperator` task, for example `trigger_new_dag = TriggerDagRunOperator(task_id=..., python_callable=trigger_dag_with_context, ...)`. Note that `python_callable` is only accepted by the Airflow 1.x version of this operator. The image below shows how the routing DAG behaved after executing the code.

Maybe one of the most common ways of using this method is with JSON inputs/files. The first step is to create the template file, that is, the DAG from which you will derive others by adding the inputs. Notice that you should put this file outside of the `dags/` folder.

It works but, as you can imagine, messages are published much more frequently than they are consumed. Hence, if you want to trigger the DAG in response to a given event as soon as it happens, you may be a little disappointed. That's why I will also try the solution with an external API call. Aside from scalability, there are some logical problems with this solution.
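The external API call mentioned above could look roughly like the sketch below. It assumes Airflow 2.x with the stable REST API and a basic-auth backend enabled; the base URL, the DAG id `hello_world_a`, the credentials and the helper name `build_trigger_request` are all hypothetical. The sketch only builds the request, so it can be inspected without a running Airflow instance.

```python
import base64
import json
import urllib.request


def build_trigger_request(base_url, dag_id, conf, username, password):
    """Build (but do not send) a POST request that creates a DAG run."""
    url = f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/dagRuns"
    credentials = base64.b64encode(f"{username}:{password}".encode()).decode("ascii")
    return urllib.request.Request(
        url,
        # `conf` is passed to the triggered DAG run as its configuration.
        data=json.dumps({"conf": conf}).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {credentials}",
        },
        method="POST",
    )


# Hypothetical values, for illustration only.
request = build_trigger_request(
    "http://localhost:8080", "hello_world_a", {"message_id": 42}, "admin", "admin"
)
print(request.get_method(), request.full_url)
# Sending it would be: urllib.request.urlopen(request)
```

For this to work, the target DAG has to exist and be unpaused. Because each event becomes its own HTTP call, the trigger no longer depends on how often a router DAG polls the queue.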

Trigger Airflow DAG code

External trigger

An Apache Airflow DAG can be triggered at a regular interval with a classical CRON expression. The Airflow scheduler monitors all tasks and all DAGs, and triggers the task instances whose dependencies have been met.

The post is composed of 3 parts. The first describes the external trigger feature in Apache Airflow. The second one provides code that triggers the jobs based on a queue external to the orchestration framework.

The AWS Lambda example in this post gets an Apache Airflow CLI token and invokes a directed acyclic graph (DAG) in an Amazon MWAA environment. After it runs, check under Recent Tasks that the last run was successful.

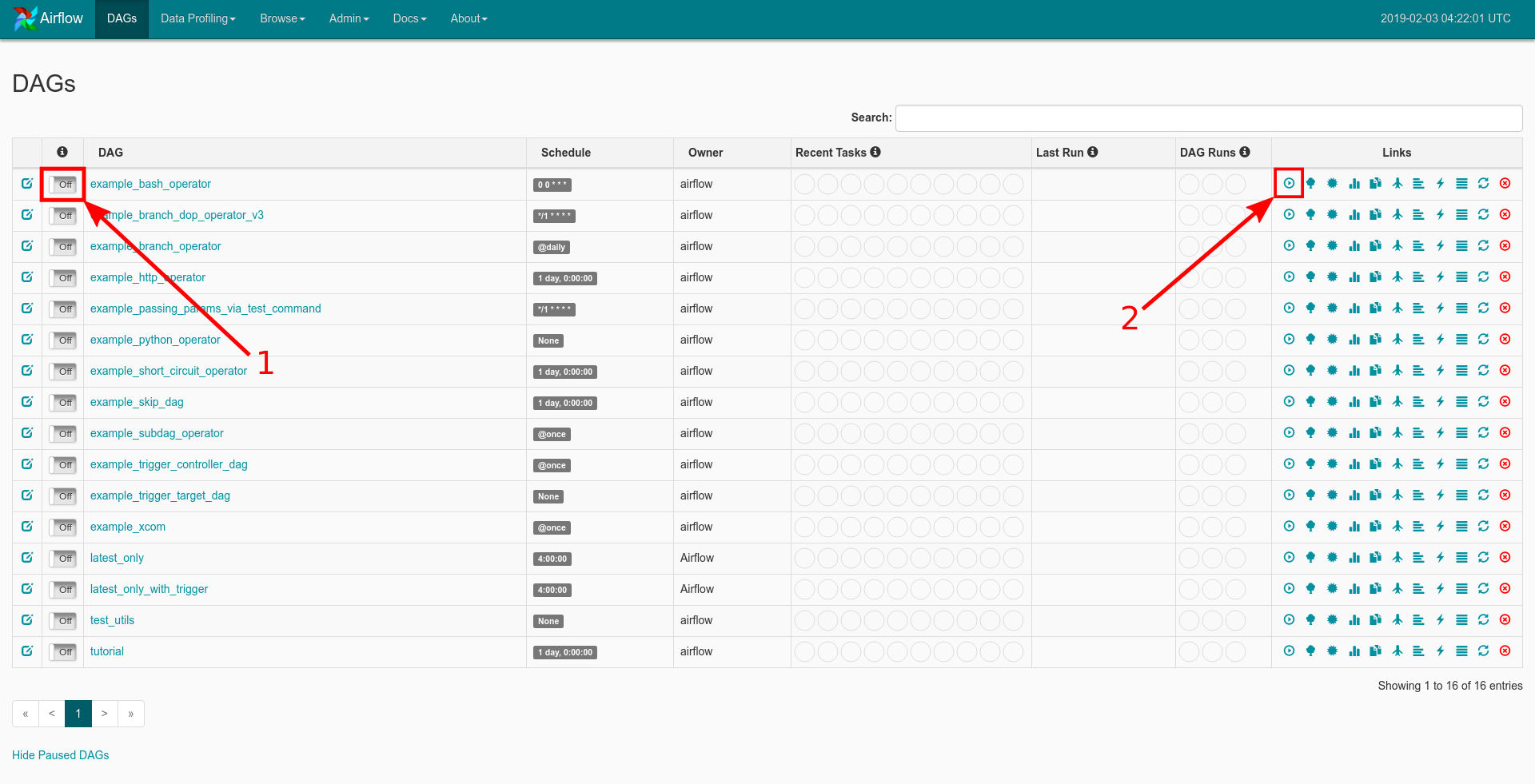

On the DAGs page, locate your new target DAG in the list of DAGs. Under Last Run, check the timestamp for the latest DAG run. This timestamp should closely match the time of your latest invocation.
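What "closely match" means can be made concrete with a small check. This is only a sketch: the one-minute tolerance, the sample timestamps and the helper name `run_matches_invocation` are assumptions, not values from the Airflow UI or the MWAA documentation.

```python
from datetime import datetime, timedelta, timezone


def run_matches_invocation(last_run, invoked_at, tolerance=timedelta(minutes=1)):
    """Return True when the DAG run started within `tolerance` of the invocation."""
    return abs(last_run - invoked_at) <= tolerance


# Hypothetical timestamps: when the function was invoked, and the
# "Last Run" value copied from the DAGs page.
invoked_at = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
last_run = datetime.fromisoformat("2024-01-01T12:00:20+00:00")
print(run_matches_invocation(last_run, invoked_at))
```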

Trigger Airflow DAG full

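Code 4.19 shows the full DAG, which starts by importing `DAG` from `airflow` together with a `FileSensor`.

The Lambda function requests an Apache Airflow CLI token, posts the CLI command to the environment's `/aws_mwaa/cli` endpoint, and returns the decoded output. Here `mwaa_env_name`, `mwaa_cli_command` (for example `dags trigger`) and `dag_name` are values you define for your own environment:

    import ast
    import base64
    import http.client
    import boto3

    client = boto3.client("mwaa")

    def lambda_handler(event, context):
        # Request a short-lived CLI token for the environment.
        mwaa_cli_token = client.create_cli_token(Name=mwaa_env_name)
        conn = http.client.HTTPSConnection(mwaa_cli_token["WebServerHostname"])
        payload = mwaa_cli_command + " " + dag_name
        headers = {"Authorization": "Bearer " + mwaa_cli_token["CliToken"],
                   "Content-Type": "text/plain"}
        conn.request("POST", "/aws_mwaa/cli", payload, headers)
        # The response carries the CLI output base64-encoded under "stdout".
        mydata = ast.literal_eval(conn.getresponse().read().decode("UTF-8"))
        return base64.b64decode(mydata["stdout"])

Choose Test to invoke your function using the Lambda console.

To verify that your Lambda successfully invoked your DAG, use the Amazon MWAA console to navigate to your environment's Apache Airflow UI and check the latest run of the target DAG there.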
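Besides checking the UI, it can help to look at both output streams of the `/aws_mwaa/cli` response, since a failed CLI command reports its error on `stderr`. The helper below is a minimal sketch that assumes the response body is a JSON object with base64-encoded `stdout` and `stderr` fields; the function name `decode_cli_response` and the sample body are hypothetical and built locally.

```python
import base64
import json


def decode_cli_response(body: bytes) -> dict:
    """Decode the base64-encoded stdout/stderr fields of a CLI response body."""
    parsed = json.loads(body.decode("utf-8"))
    return {
        stream: base64.b64decode(parsed.get(stream, "")).decode("utf-8")
        for stream in ("stdout", "stderr")
    }


# Hypothetical response body, built locally for illustration only.
sample_body = json.dumps({
    "stdout": base64.b64encode(b"Created <DagRun example>").decode("ascii"),
    "stderr": "",
}).encode("utf-8")

decoded = decode_cli_response(sample_body)
print(decoded["stdout"])
```

Returning both streams from the Lambda function, instead of `stdout` only, makes a failed trigger visible directly in the Lambda test result.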
